5 Reasons to Use Vectara’s LangChain Integration

Why Vectara is better for enterprise-scale LangChain applications

June 15, 2023 by Ofer Mendelevitch

Introduction

LangChain makes building LLM-powered applications relatively easy, as our good friend Waleed at Anyscale was quick to observe.

In our shared blog post about Vectara’s integration with LangChain, we looked at a typical question-answering application over a single text document using LangChain and Vectara.

Now imagine how you might want to scale this simple application from ingesting a single document to an enterprise-scale GenAI application that can handle millions of documents and a large number of queries – all while maintaining high accuracy, low cost and latency, and ensuring privacy and security of your data.

It turns out this requires more work than one might think. There are non-trivial hurdles along the way, such as data security, data privacy, scalability, and cost, as well as many compliance-related IT requirements.

Why use Vectara with LangChain?

Vectara was designed to simplify building GenAI applications for the enterprise, and in this blog post, we will discuss five ways in which Vectara can ease the transition of your LangChain application from a quick and simple demo to enterprise-scale.

Reason 1: Getting the Most Relevant Facts

One of the most important capabilities for “Grounded Generation” is to “get your facts right” – any retrieval-augmented generation engine depends heavily on the facts (document segments) that are provided to the summarization phase. If you get the wrong facts, the response won’t be accurate and your application’s performance degrades significantly.

When building a GenAI application by composing (or chaining) most of the components individually, it’s actually quite easy to miss small details in this retrieval step that may result in reduced performance. Let’s look at a few of these details.

Using a Dual Encoder

In the retrieval step, it’s important to use embeddings that ensure optimal retrieval for question-answering. One key reason for this is that you want the embeddings to differentiate between questions and answers.

Imagine, for example, that you ask “what is the tallest mountain in the world?” and that same exact text appears in one of the documents in your index. In that case, “what is the tallest mountain in the world?” would be returned as the most relevant match, since the query and document vectors in embedding space are exactly the same. What you want instead is for the query engine to return text that matches the intent of the question (that is, an answer), not the question itself.
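To make this failure mode concrete, here is a minimal sketch. It uses a toy bag-of-words encoder as a stand-in for any symmetric embedding, where queries and documents are encoded the same way. The `embed` and `cosine` helpers are assumptions for illustration only; this is not Vectara's model or a production embedding.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy symmetric 'embedding': a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = "what is the tallest mountain in the world?"
documents = [
    "what is the tallest mountain in the world?",           # the question itself
    "Mount Everest is the tallest mountain in the world.",  # the actual answer
]

# The same encoder is used for query and documents, so identical text
# always gets the maximum similarity.
scores = [cosine(embed(query), embed(doc)) for doc in documents]
best = max(range(len(documents)), key=lambda i: scores[i])

print("scores:", scores)
print("top match:", documents[best])
```

With a symmetric encoder, the copy of the question outranks the sentence that actually answers it. An encoder that treats questions and answers differently is meant to avoid exactly this ranking.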

Vectara’s embeddings engine, a state-of-the-art dual encoder (built by our founder Amin, who was part of the early research team for neural search at Google), is designed to address exactly this issue, and is optimized for retrieval-augmented-generation use cases.

Hybrid Search

Neural search provides a whole new level of search relevance and powers our ability to retrieve just the right facts for summarization. But neural search is not perfect, and there are cases where traditional keyword-style approaches are still valuable, especially in “out of domain” search and when the intent of the user is very specific (e.g. when they are looking for a specific product or SKU). This is the main motivation for Hybrid Search, an approach that combines both neural search and keyword-style approaches into a retrieval mechanism that gets you the best of both worlds.

Implementing hybrid search with LangChain alone can be tricky: you have to generate two types of embeddings (dense and sparse), combine them when indexing documents in your vector database, and use them in precisely the right way when submitting a query.
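As a rough illustration of the query-time half of this, the sketch below blends a dense (neural) score and a sparse (keyword) score with a single weight. Linear interpolation is one common way to combine the two and is assumed here for simplicity; the `hybrid_score` function, the `lam` weight, and the candidate scores are all hypothetical and do not describe how Vectara or any specific vector database implements hybrid search.

```python
def hybrid_score(dense: float, sparse: float, lam: float = 0.5) -> float:
    """Blend a dense (neural) and a sparse (keyword) relevance score.

    lam = 0.0 uses only the dense score; lam = 1.0 uses only the sparse score.
    Both inputs are assumed to be normalized to the same range already.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    return (1.0 - lam) * dense + lam * sparse


# Hypothetical, already-normalized scores for two candidate segments.
candidates = {
    "doc_semantic": {"dense": 0.82, "sparse": 0.10},   # close in meaning
    "doc_exact_sku": {"dense": 0.55, "sparse": 0.95},  # exact keyword/SKU hit
}


def rank(lam: float) -> list:
    """Return candidate ids ordered by blended score, best first."""
    return sorted(
        candidates,
        key=lambda d: hybrid_score(candidates[d]["dense"], candidates[d]["sparse"], lam),
        reverse=True,
    )


print(rank(0.0))  # neural only
print(rank(0.5))  # equal blend
```

In this toy setup, the purely neural ranking prefers the semantically similar segment, while the blended ranking surfaces the exact SKU match. Getting the weight and the score normalization right is part of what makes a hand-built hybrid pipeline tricky.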

Vectara implements hybrid search as an integral part of the API, and takes care of all these details for you. You just have to submit the query and you get highly accurate search results. This alone is a great reason to use Vectara along with LangChain, but let’s look at some other reasons.

Reason 2: Vector Database Reindexing

Indices created by vector database products will often degrade in performance over time, due to shifts in distribution of the embedding vectors. Although it’s possible to reindex, it’s often a non-trivial task, and takes a lot of expertise to do right. Furthermore, it’s often unclear when to reindex and somewhat difficult to truly understand the implications this has on performance.

At Vectara we invest a lot of effort in developing an integrated solution that continuously optimizes its performance for our customers to achieve the best results.

This all happens behind the scenes, and in your LangChain application you don’t have to manage this complex aspect of retrieval – the Vectara platform does that for you to ensure optimal results every time.

Reason 3: Data Security

A critical consideration for enterprises is the security and privacy of their data assets, and specifically encryption at rest and in transit.

When building your application with LangChain, you have a lot of flexibility but more often than not your application depends on multiple endpoints, each managed by a different vendor with their own privacy/security implementation. As the capabilities and policies of the various vendors and products change, it may be difficult to fully understand the implications for your security posture, and easy to miss a detail in the interface between the various endpoints and systems.

An additional consideration is support for customer-managed keys (also known as CMK), a strategy used by SaaS providers to allow customers to manage their own encryption keys. CMK provides an additional layer of security, and in some cases is mandated by regulation or by a company’s security policies.

Vectara fully supports customer-managed keys, which makes it easy for you to ensure your GenAI application is compatible with your internal IT security policies.

Reason 4: Data Privacy

A lot has been said about privacy of data with LLMs, and there is certainly a concern amongst enterprise users regarding the use of their proprietary data. Specifically, the question people ask the most is: “Would my data remain mine, or will you use it to train your future models?”

At Vectara we maintain complete separation between the data we train our models on and customer data – we NEVER train our public models on customer data (including prompts). That means you don’t have to worry about data privacy leaks.

Reason 5: Latency and Cost

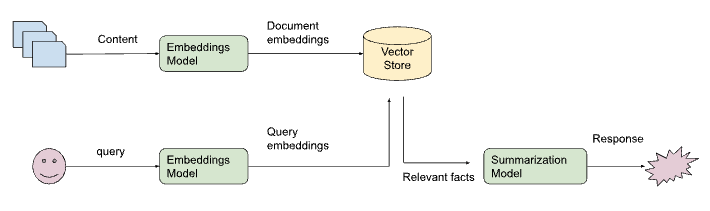

When a user issues a query to a chain in LangChain, there are multiple steps before the response is available to the user: calculating embeddings, comparing the embeddings to document embeddings (in the vector store) to retrieve the facts, and finally calling the summarization model.

Figure 1: Grounded Generation process with LangChain.

This can pose two challenges when deployed at scale.

First, the overall latency of the application chain is the sum of latencies across each component. So if any of the pieces have longer latency for a given query, this can negatively impact the overall user experience.
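A small sketch of this additive behavior follows. The stage names mirror the steps described earlier, but the millisecond values are made-up placeholders for illustration, not measurements of any real service.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative, not measured).
stage_latency_ms = {
    "embed_query": 50,
    "vector_search": 30,
    "summarize": 1500,
}


def total_latency_ms(stages: dict) -> int:
    """End-to-end latency of a sequential chain is the sum of its stages."""
    return sum(stages.values())


baseline = total_latency_ms(stage_latency_ms)

# If a single stage slows down for one query, the whole chain slows down
# by the same amount.
slow_search = dict(stage_latency_ms, vector_search=300)
degraded = total_latency_ms(slow_search)

print(baseline, degraded, degraded - baseline)
```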

Second, each of the steps in the chain has a certain cost associated with it, and those can ramp up pretty quickly.

For example, let’s do some back-of-the-envelope math. Our assumptions are:

- 1M documents, each document with 30 sentences on average that require an embedding, resulting in about 1,500 tokens per document (here we assume an average of 50 tokens per sentence).

- 100K queries a month, each query averaging 1,000 tokens sent to summarization, including the prompt, the query and 5 relevant segments or facts from the document (a segment can be one or more sentences).

- Let’s assume Pinecone as the vector DB: since we have 1M documents and each is about 30 sentences (vectors), we have a total of 30M vectors, which translates into a requirement for about 5 Pinecone pods.

With these assumptions, we can now calculate the cost as follows:

| Component | Unit price | Cost |
| --- | --- | --- |
| Embeddings (Ada) | $0.0004 for 1K tokens | $800 to create embeddings for 1M documents |
| LLM (GPT4) | $0.06 for 1K tokens | $6,000/month |
| Vector DB (Pinecone) | 5 x AWS p2/x8 pod: $1.9980/hour | $7,175/month |

That comes to $13,175/month in addition to the startup cost for creating the embeddings, which is $800.
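The back-of-the-envelope math can be reproduced with a short script. This is a sketch based only on the assumptions listed above; the 720-hour month (30 days) and the reading of $1.9980/hour as a per-pod price are assumptions added here. With these inputs the script lands near, but not exactly on, the figures quoted above (for example, about $600 rather than $800 for the one-time embeddings). The gaps presumably come from rounding or from inputs not spelled out in the text.

```python
# Workload assumptions taken from the list above.
DOCS = 1_000_000
SENTENCES_PER_DOC = 30
TOKENS_PER_SENTENCE = 50
QUERIES_PER_MONTH = 100_000
TOKENS_PER_QUERY = 1_000

# Prices taken from the table above.
EMBED_PRICE_PER_1K = 0.0004   # USD per 1K tokens (Ada)
LLM_PRICE_PER_1K = 0.06       # USD per 1K tokens (GPT4)
PODS = 5
POD_PRICE_PER_HOUR = 1.998    # USD, assumed to be per pod
HOURS_PER_MONTH = 720         # assumption: 30-day month

tokens_per_doc = SENTENCES_PER_DOC * TOKENS_PER_SENTENCE  # 1,500 tokens
total_vectors = DOCS * SENTENCES_PER_DOC                  # 30M vectors

# One-time cost of embedding the whole corpus.
embedding_cost = DOCS * tokens_per_doc / 1000 * EMBED_PRICE_PER_1K

# Recurring monthly costs.
llm_monthly = QUERIES_PER_MONTH * TOKENS_PER_QUERY / 1000 * LLM_PRICE_PER_1K
vector_db_monthly = PODS * POD_PRICE_PER_HOUR * HOURS_PER_MONTH
monthly_total = llm_monthly + vector_db_monthly

print(f"vectors to index:       {total_vectors:,}")
print(f"one-time embeddings:    ${embedding_cost:,.2f}")
print(f"LLM per month:          ${llm_monthly:,.2f}")
print(f"vector DB per month:    ${vector_db_monthly:,.2f}")
print(f"total per month:        ${monthly_total:,.2f}")
```

Changing a single constant, such as the number of queries or the tokens per query, shows how quickly each line item scales.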

And as pointed out in the article “all languages are not created equal,” the same sentence can result in up to 9-10x more tokens in some non-English languages than in English, which increases costs even further.

When you use Vectara to create your GenAI application, latency is optimized across multiple steps, and we provide a transparent, unified billing model based on the size of the index and the number of queries (regardless of the language your customers use). As a result, the cost with Vectara would in most cases be substantially smaller, typically 10% or less of the figure above: instead of a nearly $14k monthly bill, with Vectara you are looking at only a few hundred dollars.

Summary

Since early 2023, AI innovation has been moving at breakneck speed. We are excited to be part of this evolving stack and to enable developers to build LLM-powered GenAI applications with Grounded Generation.

As enterprises move from evaluation to deployment of new LLM-powered AI applications, Vectara provides a quick and easy path to scale LangChain applications to the enterprise.

As more advanced applications like goal-seeking agents on LangChain mature, and become good enough to deploy in the real world, the LangChain + Vectara integration can also help scale those applications.

If you’d like to experience the benefits of Vectara + LangChain firsthand, you can sign up for a free Vectara account.