
Automating Hallucination Detection: Introducing the Vectara Factual Consistency Score

Vectara’s Factual Consistency Score (FCS) offers an innovative and reliable way to detect hallucinations in RAG. It is a calibrated score that helps developers evaluate hallucinations automatically. Our customers can use it to measure and improve response quality. The FCS can also be shown to end users of RAG applications as a visual cue of response quality.

March 26, 2024 by Nick Ma

Introduction

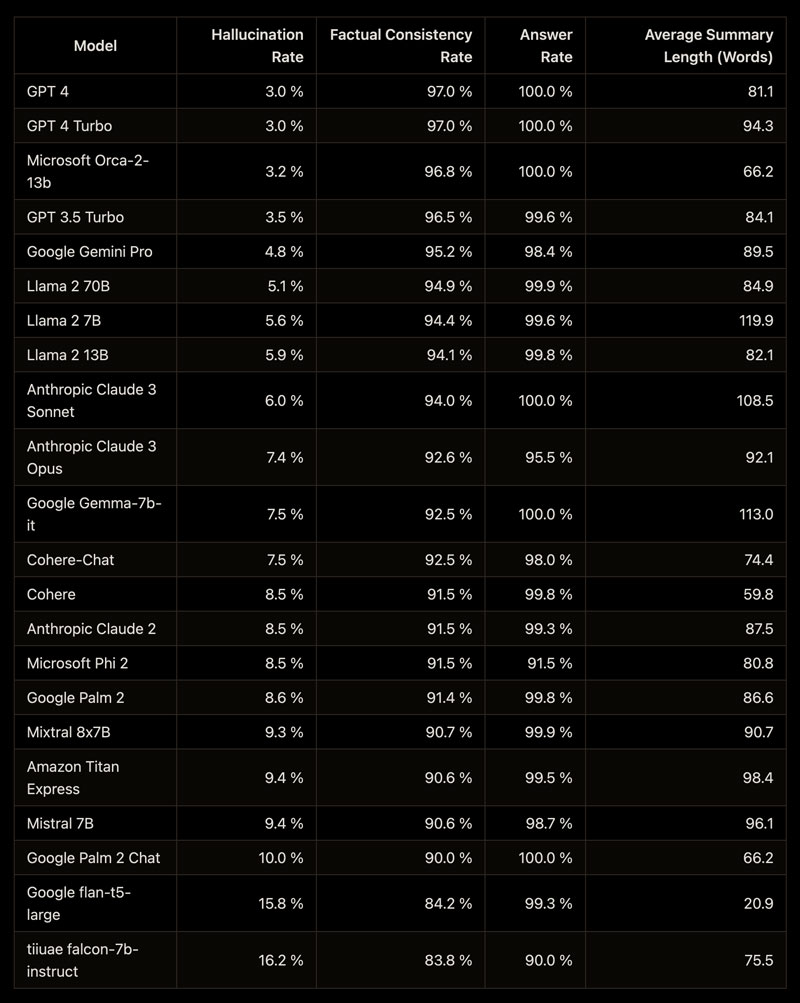

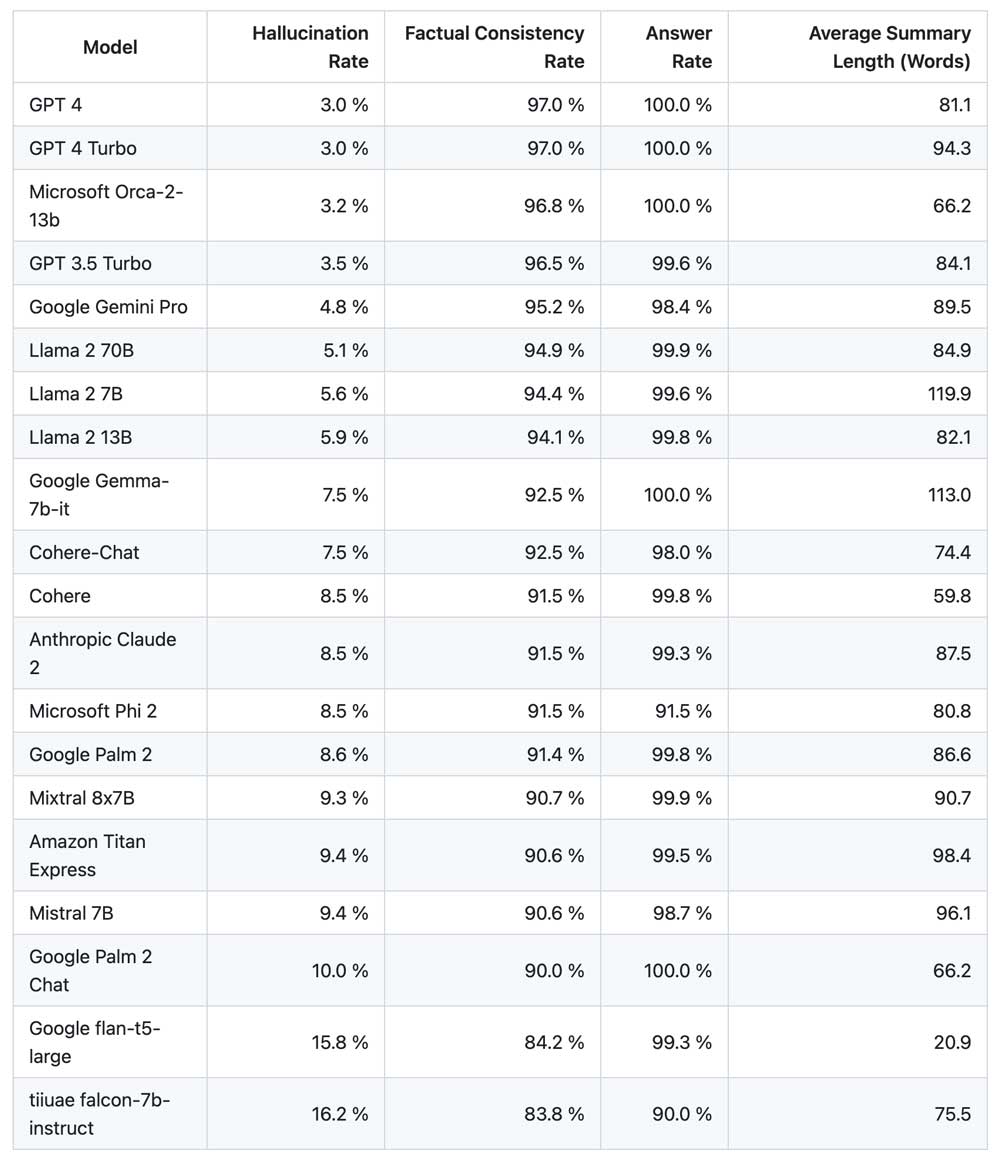

Reliable, automated hallucination detection in generative AI has been a formidable challenge, particularly in sectors that demand the highest accuracy, such as legal and healthcare. Vectara has released the Hughes Hallucination Evaluation Model (HHEM) v1.0 on Hugging Face and GitHub, where it has gathered 1,000+ stars and 100K+ downloads. An illuminating New York Times article has cast a spotlight on how prevalent hallucinations are across leading LLMs, underscoring the urgency of the problem. Traditionally, developers have relied on “LLM as a judge”, using OpenAI’s GPT-4 or GPT-3.5 to identify hallucinations. However, this approach has real limitations: the judge model tends to favor its own outputs, and it comes with prohibitive costs, long latency, and restrictive token limits. Our customers have run into all of these challenges too.

Introducing Vectara’s Factual Consistency Score

We are thrilled to unveil the Vectara Factual Consistency Score, a calibrated score that translates directly into a probability and helps developers evaluate hallucinations automatically. It sets a new standard for automated hallucination detection and outperforms today’s most common approach, which uses GPT-4 or GPT-3.5 as the evaluator. The Vectara FCS offers better performance, lower cost, and low latency, making it a significant step toward building trust in GenAI technologies. It also marks a shift from manual to automated evaluation, a more efficient and accurate way to ensure the integrity of AI-generated content.

The Factual Consistency Score helps developers refine and improve the quality of applications ranging from internal Q&A systems to customer interactions. Because the score is calibrated, it can be read directly as the probability that a response is factually consistent. Many machine learning classifiers produce uncalibrated scores that cannot be interpreted this way. For instance, a score of 0.98 means a 98% likelihood that the response is factually consistent with its source, a transparent and interpretable metric for developers and users alike.
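You can check calibration yourself on a labeled sample. The sketch below is our own illustration, not Vectara’s evaluation code. It computes the standard expected calibration error (ECE). Scores are grouped into bins, and each bin’s average score is compared with the fraction of its responses that a human judged consistent. A well-calibrated score gives an ECE near zero. The function name and the 10-bin default are our own choices.

```python
from collections.abc import Sequence


def expected_calibration_error(
    scores: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> float:
    """Expected calibration error (ECE) of probability-like scores.

    scores: predicted probability (0..1) that each response is consistent.
    labels: 1 if a human judged the response consistent, else 0.
    """
    if len(scores) != len(labels) or not scores:
        raise ValueError("scores and labels must be non-empty and equal length")

    # Assign each score to an equal-width bin; a score of exactly 1.0
    # goes into the last bin instead of overflowing.
    bins: list[list[int]] = [[] for _ in range(n_bins)]
    for i, score in enumerate(scores):
        bins[min(int(score * n_bins), n_bins - 1)].append(i)

    total = len(scores)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_score = sum(scores[i] for i in members) / len(members)
        observed_rate = sum(labels[i] for i in members) / len(members)
        # Weight each bin's |confidence - observed rate| gap by its share of samples.
        ece += len(members) / total * abs(mean_score - observed_rate)
    return ece
```

For example, suppose ten responses each score 0.9 and nine of them are judged consistent. The bin’s average score then matches its observed rate, and the ECE is essentially zero.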

Examples of Hallucinations

Some LLM hallucinations are obvious to spot and understand, while others are subtle and difficult to detect. That is why a good hallucination detection model is so valuable: it is built to catch many kinds of hallucination, not only the obvious ones. Here are four examples illustrating different types of LLM hallucinations:

Input article: The first vaccine for Ebola was approved by the FDA in 2019 in the US, five years after the initial outbreak in 2014. To produce the vaccine, scientists had to sequence the DNA of Ebola, then identify possible vaccines, and finally show successful clinical trials. Scientists say a vaccine for COVID-19 is unlikely to be ready this year, although clinical trials have already started.

Type 1: Relation error: “The Ebola vaccine was rejected by the FDA in 2019.” This mistake gets the relationship between the vaccine and the FDA wrong: the article says the vaccine was approved, not rejected.

Type 2: Entity error: “The COVID-19 vaccine was approved by the FDA in 2019.” This error arises from the AI confusing details between Ebola and COVID-19.

Type 3: Coreference error: “The first vaccine for Ebola was approved by the FDA in 2019. They say a vaccine for COVID-19 is unlikely to be ready this year.” The confusion arises from the pronoun “they”. In the summary, it appears to refer to the FDA, but in the original article the statement comes from scientists.

Type 4: Discourse link error: “To produce the vaccine, scientists have to show successful human trials, then sequence the DNA of the virus.” This summary reverses the order of events. The original article states that scientists first sequenced Ebola’s DNA and showed successful clinical trials only at the end.
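Detectors like HHEM take the source text and the generated text together as a (premise, hypothesis) pair. The sketch below builds those pairs for the article and the four summaries above. It also shows one way to score them with the open HHEM checkpoint on Hugging Face. The scoring function is an assumption based on the model card for the checkpoint currently published as `vectara/hallucination_evaluation_model`, which loads with `trust_remote_code=True` and exposes a `predict` method. Earlier versions were loaded differently, so check the card for the version you use. The open model is also not necessarily identical to the FCS computed inside Vectara’s platform. The names `build_pairs` and `score_with_open_hhem` are hypothetical helpers.

```python
from collections.abc import Iterable

# The input article and the four hallucinated summaries from the examples above.
ARTICLE = (
    "The first vaccine for Ebola was approved by the FDA in 2019 in the US, "
    "five years after the initial outbreak in 2014. To produce the vaccine, "
    "scientists had to sequence the DNA of Ebola, then identify possible "
    "vaccines, and finally show successful clinical trials. Scientists say a "
    "vaccine for COVID-19 is unlikely to be ready this year, although clinical "
    "trials have already started."
)

SUMMARIES = {
    "relation": "The Ebola vaccine was rejected by the FDA in 2019.",
    "entity": "The COVID-19 vaccine was approved by the FDA in 2019.",
    "coreference": (
        "The first vaccine for Ebola was approved by the FDA in 2019. "
        "They say a vaccine for COVID-19 is unlikely to be ready this year."
    ),
    "discourse": (
        "To produce the vaccine, scientists have to show successful human "
        "trials, then sequence the DNA of the virus."
    ),
}


def build_pairs(article: str, summaries: Iterable[str]) -> list[tuple[str, str]]:
    """Pair the source (premise) with each generated text (hypothesis)."""
    return [(article, summary) for summary in summaries]


def score_with_open_hhem(pairs: list[tuple[str, str]]) -> list[float]:
    """Score (premise, hypothesis) pairs with the open HHEM checkpoint.

    ASSUMPTION: follows the usage on the Hugging Face model card. Downloads
    the model on first call. Scores near 0 suggest hallucination; scores
    near 1 suggest consistency.
    """
    # Imported lazily so build_pairs works without transformers installed.
    from transformers import AutoModelForSequenceClassification

    model = AutoModelForSequenceClassification.from_pretrained(
        "vectara/hallucination_evaluation_model", trust_remote_code=True
    )
    return [float(score) for score in model.predict(pairs)]
```

Calling `score_with_open_hhem(build_pairs(ARTICLE, SUMMARIES.values()))` returns one score per summary, in the same order as `SUMMARIES`.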

How to Utilize the Factual Consistency Score

The Vectara FCS is integrated into both our API and console and is enabled by default.

Here’s a brief overview of how to use it:

- API: Every summary is now returned with a Factual Consistency Score. Further details are available in Vectara’s documentation.

- Console User Interface: This feature can be accessed within the console by navigating to the left-hand sidebar, selecting a corpus, and proceeding to ‘Query,’ followed by the ‘Evaluation’ section, where you can enable or disable it as needed.
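If you read the score programmatically, a defensive parser helps. The sketch below is hypothetical. The key name `factual_consistency_score` and its top-level position in the JSON body are assumptions, because the real field name and nesting depend on the API version you call. Check Vectara’s documentation for the actual response shape. The sketch treats a missing score as “no score” rather than as zero and rejects values outside [0, 1].

```python
import json
from typing import Optional

# HYPOTHETICAL key name and location; check Vectara's documentation for the
# actual response shape of the API version you use.
FCS_KEY = "factual_consistency_score"


def extract_fcs(response_body: str) -> Optional[float]:
    """Return the FCS from a JSON response body, or None if it is absent.

    A missing score is returned as None instead of being defaulted to 0,
    so callers cannot mistake "no score" for "certainly hallucinated".
    """
    data = json.loads(response_body)
    value = data.get(FCS_KEY)
    if value is None:
        return None
    score = float(value)
    # A calibrated probability must lie in [0, 1].
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"FCS out of range: {score}")
    return score
```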

The threshold for the Factual Consistency Score can be adjusted to meet each customer’s specific needs, based on their use case. We suggest starting with 0.5 as an initial guideline.
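One way to tune the threshold is sketched below. This is our own illustration, not a Vectara feature. Start at 0.5. Then, if you have a small set of responses that a human has labeled as consistent or hallucinated, sweep candidate thresholds and keep the one with the best balanced accuracy. Balanced accuracy is the mean of the recall on each class, so imbalanced data misleads it less than plain accuracy. All function names here are hypothetical.

```python
from collections.abc import Sequence
from typing import Optional

# Starting point suggested in the post; tune it for your own use case.
DEFAULT_THRESHOLD = 0.5


def flag_hallucination(score: float, threshold: float = DEFAULT_THRESHOLD) -> bool:
    """True when a response scores below the threshold (likely hallucinated)."""
    return score < threshold


def balanced_accuracy(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> float:
    """Mean of per-class recall; label 1 = consistent, 0 = hallucinated."""
    tp = tn = pos = neg = 0
    for score, label in zip(scores, labels):
        predicted_consistent = score >= threshold
        if label == 1:
            pos += 1
            if predicted_consistent:
                tp += 1
        else:
            neg += 1
            if not predicted_consistent:
                tn += 1
    if pos == 0 or neg == 0:
        raise ValueError("need at least one example of each label")
    return 0.5 * (tp / pos + tn / neg)


def pick_threshold(
    scores: Sequence[float],
    labels: Sequence[int],
    candidates: Optional[Sequence[float]] = None,
) -> float:
    """Return the candidate threshold with the highest balanced accuracy."""
    if candidates is None:
        candidates = [i / 20 for i in range(1, 20)]  # 0.05, 0.10, ..., 0.95
    # max() returns the first best candidate, so ties go to the lowest threshold.
    return max(candidates, key=lambda t: balanced_accuracy(scores, labels, t))
```

Breaking ties toward the lowest threshold is a simple choice. You might instead prefer the tied threshold closest to 0.5. You might also weigh missed hallucinations more heavily than false alarms in high-stakes domains such as legal or healthcare.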

With the Vectara FCS, we are making Vectara a more reliable and trustworthy RAG-as-a-Service platform. It reflects our commitment to excellence and our dedication to improving the reliability of GenAI for developers and industries worldwide.

Sign Up for an Account and Connect With Us!

As always, we’d love to hear your feedback! Connect with us on our forums or on our Discord.

Sign up for a free account to see how Vectara can help you easily leverage retrieval-augmented generation in your GenAI apps.